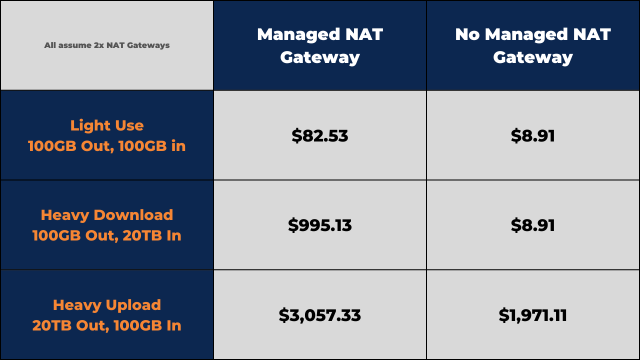

Since 2015, we’ve been gifted with AWS’s Managed NAT Gateway offering, and the vast majority of AWS architecture diagrams contain them. This is no doubt due to AWS’s long-standing desire to always promote its own products over competing solutions. They are absolutely an excellent service, provided that you do your due diligence to ensure that:

- You need a service with unlimited scalability that adds 50% to your transfer charges

- You have the correct preventative measures to ensure that excessive traffic doesn’t kill your bill

It has been my experience that many workloads don’t have those factors in play, and might be better served by another solution.

Managed NAT Gateways cost about $35/mo/gateway (in the cheaper regions at least), which isn’t too much of a problem for any commercial workload. The real issue is that they add costs to external transfers, and can scale without limit to handle massive amounts of traffic. Couple this with AWS’s poor billing alerts and lack of real cost containment features, and Managed NAT Gateways tend to cause surprise billing spikes when something breaks.
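To make the arithmetic concrete, here is a rough sketch of how the fixed and per-GB charges add up. The three prices are assumptions based on approximate us-east-1 list prices, and the function name `nat_monthly_cost` is made up for this example; check the current AWS pricing pages for your region before relying on any of these numbers. It also treats all traffic as outbound at a flat egress rate, which is a simplification.

```bash
#!/bin/bash
# Rough monthly cost of one Managed NAT Gateway for a given traffic volume.
# All three prices are ASSUMPTIONS (approximate us-east-1 list prices).
NAT_HOURLY=0.045      # USD per gateway-hour (assumed)
NAT_PER_GB=0.045      # USD per GB processed by the gateway (assumed)
EGRESS_PER_GB=0.09    # USD per GB of Internet egress, flat rate (assumed)

# nat_monthly_cost GB: print the cost breakdown for GB of outbound traffic.
nat_monthly_cost() {
  local gb="$1"
  awk -v gb="$gb" -v h="$NAT_HOURLY" -v p="$NAT_PER_GB" -v e="$EGRESS_PER_GB" 'BEGIN {
    fixed      = h * 730    # roughly 730 hours in a month
    processing = gb * p     # NAT Gateway data processing charge
    egress     = gb * e     # ordinary Internet egress charge
    printf "fixed=%.2f processing=%.2f egress=%.2f total=%.2f\n",
           fixed, processing, egress, fixed + processing + egress
  }'
}

nat_monthly_cost 100      # a quiet month
nat_monthly_cost 50000    # a runaway job pushing 50 TB
```

With these assumed prices the processing charge is always half of the egress charge, which is where the 50% figure comes from, and nothing in the service stops the second case from happening.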

Solution 1: Use your own NAT Gateways

It’s pretty straightforward to make your own NAT Gateway, and the AWS documentation covers this topic pretty well. These can still allow for surprise billing due to high traffic rates, so you can add some queueing and bandwidth limits to better protect against problems. Here’s a script I have used:

    #!/bin/bash
    # ${VpcCIDR} and ${MaxBandwidth} (in Mbit/s) are filled in by the template.

    # Enable forwarding and masquerade traffic leaving the VPC.
    echo 1 > /proc/sys/net/ipv4/ip_forward
    INTERFACE=eth0
    iptables -t nat -A POSTROUTING -s ${VpcCIDR} -o $INTERFACE -j MASQUERADE

    # Make cloud-init run this user script on every boot, not just the first.
    sed -i 's/scripts-user$/\[scripts-user, always\]/' /etc/cloud/cloud.cfg

    # At this point, the NAT instance is done. The rest is bandwidth management.

    # Total cap, plus 20%, 40% and 80% of it, all expressed in kbit.
    LIMIT=$(printf "%.0fkbit" "$((10**3 * ${MaxBandwidth}))")
    LIMIT20=$(printf "%.0fkbit" "$((10**6 * ${MaxBandwidth} * 2))e-4")
    LIMIT40=$(printf "%.0fkbit" "$((10**6 * ${MaxBandwidth} * 4))e-4")
    LIMIT80=$(printf "%.0fkbit" "$((10**6 * ${MaxBandwidth} * 8))e-4")

    # HTB tree: 1:1 is the overall cap, 1:10 is high priority, 1:12 is the default.
    tc qdisc add dev $INTERFACE root handle 1: htb default 12
    tc class add dev $INTERFACE parent 1: classid 1:1 htb rate $LIMIT ceil $LIMIT burst 10k
    tc class add dev $INTERFACE parent 1:1 classid 1:10 htb rate $LIMIT20 ceil $LIMIT40 prio 1 burst 10k
    tc class add dev $INTERFACE parent 1:1 classid 1:12 htb rate $LIMIT80 ceil $LIMIT prio 2

    # All UDP (IP protocol 0x11) goes to the high-priority class.
    tc filter add dev $INTERFACE protocol ip parent 1:0 prio 1 u32 match ip protocol 0x11 0xff flowid 1:10
    tc qdisc add dev $INTERFACE parent 1:10 handle 20: sfq perturb 10
    tc qdisc add dev $INTERFACE parent 1:12 handle 30: sfq perturb 10

    # Set the high-priority class and the protocols which use it
    iptables -t mangle -A POSTROUTING -o $INTERFACE -p tcp -m tos --tos Minimize-Delay -j CLASSIFY --set-class 1:10
    iptables -t mangle -A POSTROUTING -o $INTERFACE -p icmp -j CLASSIFY --set-class 1:10
    iptables -t mangle -A POSTROUTING -o $INTERFACE -p tcp --sport 53 -j CLASSIFY --set-class 1:10
    iptables -t mangle -A POSTROUTING -o $INTERFACE -p tcp --dport 53 -j CLASSIFY --set-class 1:10
    iptables -t mangle -A POSTROUTING -o $INTERFACE -p tcp --sport 22 -j CLASSIFY --set-class 1:10
    iptables -t mangle -A POSTROUTING -o $INTERFACE -p tcp --dport 22 -j CLASSIFY --set-class 1:10

    # A couple more chains to tag short TCP signalling packets correctly
    iptables -t mangle -N ack
    iptables -t mangle -A ack -m tos ! --tos Normal-Service -j RETURN
    iptables -t mangle -A ack -p tcp -m length --length 0:128 -j TOS --set-tos Minimize-Delay
    iptables -t mangle -A ack -p tcp -m length --length 128: -j TOS --set-tos Maximize-Throughput
    iptables -t mangle -A ack -j RETURN
    iptables -t mangle -A POSTROUTING -p tcp -m tcp --tcp-flags SYN,RST,ACK ACK -j ack

    # tosfix chain (defined here; no rule in this script jumps to it)
    iptables -t mangle -N tosfix
    iptables -t mangle -A tosfix -p tcp -m length --length 0:512 -j RETURN
    iptables -t mangle -A tosfix -j TOS --set-tos Maximize-Throughput
    iptables -t mangle -A tosfix -j RETURN

However, you can also run my Budget NAT VPC CloudFormation template to do that for you. It handles 2 AZs and 1 or 2 NAT Gateways, and limits bandwidth to 100Mbps (the default, which you can change) so that mistakes don’t get too costly.
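To see what the bandwidth cap actually buys you, the sketch below repeats the limit arithmetic from the script with `MaxBandwidth` set as an ordinary shell variable (an assumption for this example; in the script it is substituted in before the script runs) and then works out the most data a saturated link could move in a 30-day month. `SECONDS_PER_MONTH` and `WORST_GB` are names made up for this example.

```bash
#!/bin/bash
# MaxBandwidth is in Mbit/s; 100 matches the default mentioned above.
MaxBandwidth=100

# Same arithmetic as the NAT script: the total cap and its 20/40/80% shares, in kbit.
LIMIT=$(printf "%.0fkbit" "$((10**3 * MaxBandwidth))")
LIMIT20=$(printf "%.0fkbit" "$((10**6 * MaxBandwidth * 2))e-4")
LIMIT40=$(printf "%.0fkbit" "$((10**6 * MaxBandwidth * 4))e-4")
LIMIT80=$(printf "%.0fkbit" "$((10**6 * MaxBandwidth * 8))e-4")
echo "cap=$LIMIT high-priority rate=$LIMIT20 ceil=$LIMIT40 default rate=$LIMIT80"

# Worst case: the link runs flat out for a 30-day month.
# Mbit/s to GB: divide by 8 (bits to bytes), then by 1000 (MB to GB).
SECONDS_PER_MONTH=$((30 * 24 * 3600))
WORST_GB=$((MaxBandwidth * SECONDS_PER_MONTH / 8 / 1000))
echo "worst-case transfer: ${WORST_GB} GB/month"
```

A bad month is still not free, but it has a ceiling you can calculate in advance. On a running NAT instance, `tc -s class show dev eth0` should show the classes and how much traffic each has carried.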

Solution 2: Use Service Proxies

While the budget NAT VPC template fixes a lot of problems, it doesn’t solve the issue that things on the inside can connect to anywhere on the Internet. You can add restrictions through iptables, of course, but that means translating the DNS names everything uses into the IPv4 and IPv6 addresses that iptables can handle. This is a non-trivial problem in the general case, and should be avoided. For the budget-conscious, using Squid as a web proxy that only allows access to a few websites solves this problem quite inexpensively, and provides some caching to save further on bandwidth costs. You would need to:

- Create a proxy instance on a public subnet

- Install Squid

- Add a text file with the allowed domains in it (`/etc/squid/allowed_domains.txt` as an example)

      windowsupdate.microsoft.com
      .windowsupdate.microsoft.com
      update.microsoft.com
      .update.microsoft.com
      windowsupdate.com
      .windowsupdate.com
      wustat.windows.com
      ntservicepack.microsoft.com
      go.microsoft.com

- Configure Squid to read the set of allowed domains from that file, and filter for them:

      # /etc/squid/conf.d/50-allowed.conf
      acl allowed_domains dstdomain "/etc/squid/allowed_domains.txt"
      http_access allow allowed_domains
      http_access deny all

- Set instances that need access to these websites to use the proxy server.
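For the last step, a minimal sketch of what pointing a Linux instance at the proxy might look like. `PROXY_HOST` is a hypothetical name (substitute your proxy instance's private DNS name or IP) and `use_proxy` is a helper made up for this example; port 3128 is Squid's default listening port. Many command-line tools honour these environment variables, but not all software does.

```bash
#!/bin/bash
# HYPOTHETICAL value: replace with your proxy instance's private DNS name or IP.
PROXY_HOST="proxy.internal.example"
PROXY_PORT=3128   # Squid's default http_port

# use_proxy: export the proxy settings for the current shell and its children.
use_proxy() {
  export http_proxy="http://${PROXY_HOST}:${PROXY_PORT}"
  export https_proxy="$http_proxy"
  # Keep instance metadata and loopback traffic off the proxy.
  export no_proxy="169.254.169.254,localhost,127.0.0.1"
}

use_proxy
echo "http_proxy=$http_proxy"

# Quick check from a real client instance (left commented out here):
# curl -sI https://go.microsoft.com/   # on the allow list, should get through
# curl -sI https://example.com/        # not on the list, Squid should refuse it
```

Windows instances would set the equivalent through their own proxy settings rather than environment variables.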

Option 3: Nah

For many internal instances that have a very specific set of functions, you don’t need any Internet access at all:

- Use VPC endpoints for the AWS services they need, like S3, SSM, and DynamoDB.

- Other AWS services can be configured into internal-only subnets, or be given endpoints in your private subnets.

- You can mirror updates internally, and also keep a base-level repo for whatever flavor of OS you’re using, mirrored to S3 for easy access.
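As a rough sketch of the first point, the snippet below prints the AWS CLI commands for the two gateway-type endpoints (S3 and DynamoDB) rather than running them, so you can review them first. `REGION`, `VPC_ID` and `ROUTE_TABLE_ID` are hypothetical placeholders, and `gateway_endpoint_cmd` is a helper made up for this example.

```bash
#!/bin/bash
# HYPOTHETICAL placeholders: substitute your own region, VPC and route table.
REGION="us-east-1"
VPC_ID="vpc-0123456789abcdef0"
ROUTE_TABLE_ID="rtb-0123456789abcdef0"

# gateway_endpoint_cmd SERVICE: print (not run) the command that would create
# a gateway endpoint for SERVICE, which should be "s3" or "dynamodb".
gateway_endpoint_cmd() {
  local service="$1"
  echo "aws ec2 create-vpc-endpoint --vpc-id $VPC_ID --vpc-endpoint-type Gateway --service-name com.amazonaws.$REGION.$service --route-table-ids $ROUTE_TABLE_ID"
}

gateway_endpoint_cmd s3
gateway_endpoint_cmd dynamodb
```

SSM is reached through interface endpoints instead, which attach to subnets and security groups rather than route tables, so it needs a slightly different command.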

Hit up the video’s comments if you have any additional info to add, or have your own workarounds.